Stock Monitoring: How Real-Time Competitor Tracking Increased Sales by 30%

I still remember the first call with this client. They sold electronics online (laptops, monitors, gaming gear, the works), and they had a theory that honestly sounded a bit paranoid at first. "Every time our competitors run out of stock," the founder told me, "someone's making money. And it's not us."

He wasn't wrong, though. When a shopper searches for a specific laptop model and finds it unavailable at Store A, they don't just give up. They move to Store B, then C, then whoever still has it in stock. The first store to know about those stockouts and react quickly gets the sale. It's obvious when you think about it, but most retailers weren't actually watching their competitors' inventory in real time.

That conversation led to one of the more interesting web scraping projects we've done at Let's Scrape. It wasn't about prices this time. It was about availability, timing, and moving fast when opportunities appeared.

About the client and their world

The client was a mid-sized online electronics retailer, not a household name but solid in their niche. They'd been in business for about eight years, profitable but growing slowly. Their challenge wasn't product selection or pricing; they were competitive on both. The issue was visibility and timing.

In e-commerce, especially in electronics, margins are thin. A laptop that costs them €800 might sell for €850. They're not making a fortune per unit, so volume matters. And volume comes from being visible at the right moment, when demand is high and supply elsewhere is limited.

They'd noticed patterns. Certain popular products would suddenly sell better on random Tuesdays or Thursdays with no obvious reason. After some digging, they realized those spikes often coincided with competitors running out of stock. Shoppers were searching, not finding what they wanted at the usual places, and landing on their site instead.

But this was reactive insight, discovered weeks later in analytics. What they wanted was to know in real time, so they could capitalize immediately.

The problem: knowing when competitors stumble

Honestly, when they first approached us, I was a bit skeptical about the scope. They wanted to monitor stock availability for about 400 products across five competitor websites, multiple times per day. Not once a day, but several times. Because in electronics, stockouts can happen quickly, especially for popular models during launch periods or holiday seasons.

They didn't just want a spreadsheet at the end of the week. They needed instant alerts. "If Competitor X runs out of the new gaming laptop at 2 PM on a Wednesday, I want to know by 2:15 PM so I can launch a banner by 2:30 PM," their marketing director explained.

At this point we thought: this is either brilliant or overthinking. Turns out it was brilliant, but getting there took some work.

The technical challenge was straightforward on paper: check product pages, parse availability status, compare to previous state, send alerts when status changes. But the execution had layers. Competitor websites aren't designed to be scraped. They don't have nice APIs saying "in stock: yes/no." Availability might be indicated by a button that says "Add to Cart," or "Out of Stock," or "Pre-Order," or "2-3 week delivery," or "Temporarily Unavailable," or a million other variations.
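The check, parse, compare, alert loop can be sketched roughly as follows. This is a minimal illustration rather than the production code: the `Status` enum, the `PHRASES` table, and the `normalize` and `detect_changes` functions are hypothetical names, and the phrase list only covers the example labels mentioned above.

```python
from enum import Enum


class Status(Enum):
    IN_STOCK = "in_stock"
    OUT_OF_STOCK = "out_of_stock"
    PRE_ORDER = "pre_order"
    DELAYED = "delayed"
    UNKNOWN = "unknown"


# Hypothetical phrase table; each competitor site would need its own tuning.
PHRASES = [
    ("out of stock", Status.OUT_OF_STOCK),
    ("temporarily unavailable", Status.OUT_OF_STOCK),
    ("pre-order", Status.PRE_ORDER),
    ("week delivery", Status.DELAYED),
    ("add to cart", Status.IN_STOCK),
]


def normalize(label: str) -> Status:
    """Map a raw availability label to a canonical status."""
    text = label.strip().lower()
    for phrase, status in PHRASES:
        if phrase in text:
            return status
    return Status.UNKNOWN


def detect_changes(previous: dict, current: dict) -> list:
    """Return (product_id, old, new) for every product whose status changed."""
    changes = []
    for product_id, new_status in current.items():
        old_status = previous.get(product_id)
        # Products seen for the first time have no previous state to compare.
        if old_status is not None and old_status != new_status:
            changes.append((product_id, old_status, new_status))
    return changes
```

Keeping normalization separate from change detection means a new label variant only requires a new row in the phrase table, not a change to the alerting logic.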

And they change their site layouts. One week the availability indicator is a green badge, next week it's buried in JSON inside a JavaScript block. Building a robust stock monitoring system meant building something that could adapt and wouldn't break the moment a competitor redesigned a button.

What we built: a monitoring system that actually worked

We started with a proof-of-concept focused on one competitor and 50 products. The goal was to show them real alerts within 72 hours, which is how we usually validate feasibility before committing to full-scale data extraction.

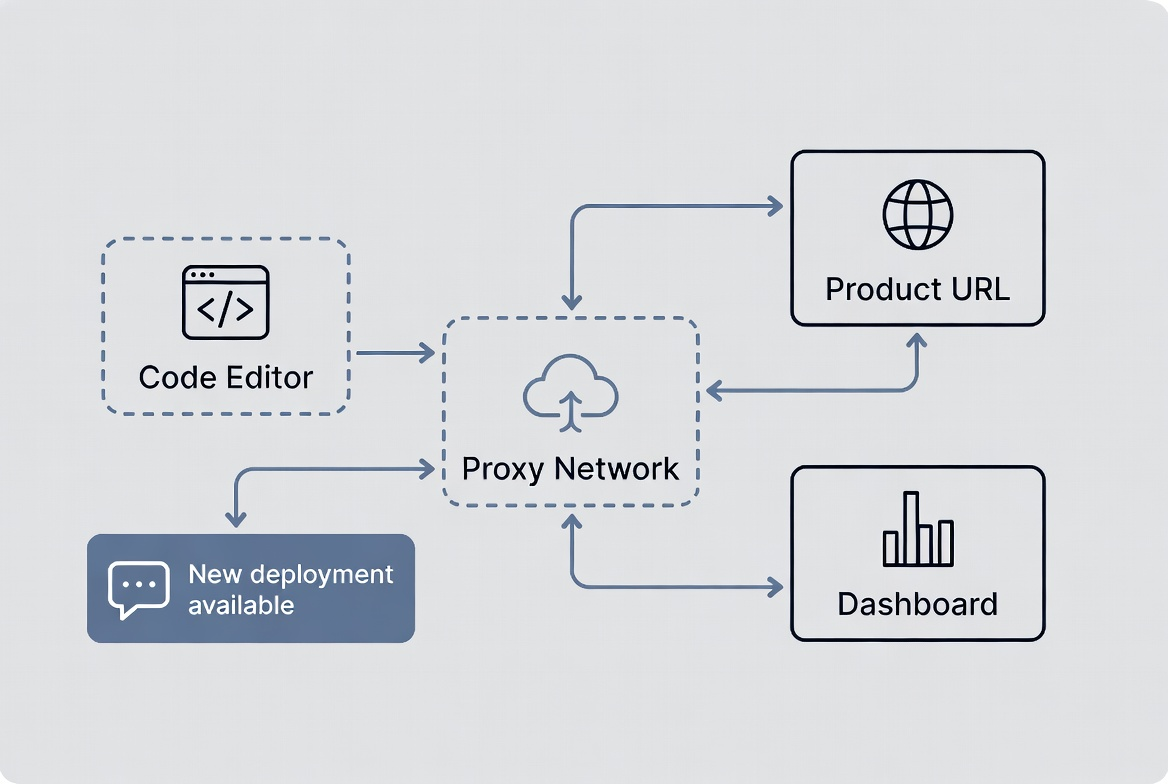

The core scraper was Python-based, running on our infrastructure with rotating proxies to avoid detection or rate limiting. For each product, we stored the competitor's product URL and built custom parsing logic to identify availability status. Some sites made it easy: a clear "Out of Stock" label in HTML. Others required checking if the "Add to Cart" button was disabled, or parsing structured data markup, or even interpreting stock level hints like "Only 2 left."
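To make two of those strategies concrete, here is a small sketch using only the Python standard library. It assumes the page exposes schema.org JSON-LD with an `offers.availability` field and, as a fallback, a cart button with the hypothetical id `add-to-cart`. Real sites vary widely, so treat the selectors and the `extract_availability` helper as illustrative assumptions, not as the parsing logic we actually shipped.

```python
import json
from html.parser import HTMLParser


class AvailabilityParser(HTMLParser):
    """Collects JSON-LD blocks and notes whether the cart button is disabled."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.jsonld_blocks = []
        self.cart_button_disabled = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "script" and attrs.get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []
        # Assumption: the cart button carries the hypothetical id "add-to-cart".
        if tag == "button" and attrs.get("id") == "add-to-cart":
            self.cart_button_disabled = "disabled" in attrs

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            self.jsonld_blocks.append("".join(self._buffer))


def extract_availability(html: str):
    """Return e.g. 'InStock' or 'OutOfStock', or None if nothing was found."""
    parser = AvailabilityParser()
    parser.feed(html)
    for block in parser.jsonld_blocks:
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            continue  # malformed markup: fall through to the next signal
        offers = data.get("offers") if isinstance(data, dict) else None
        if isinstance(offers, dict) and "availability" in offers:
            # schema.org values look like "https://schema.org/OutOfStock".
            return str(offers["availability"]).rsplit("/", 1)[-1]
    if parser.cart_button_disabled:
        return "OutOfStock"
    return None
```

Structured data is tried first because it tends to survive visual redesigns better than button markup does.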

Looking back, I'm glad we started small. The first version worked but was fragile. If a competitor's website had a temporary glitch or showed a weird cached state, we'd send a false alert. That's annoying. Imagine getting an urgent notification that your competitor is out of stock, scrambling to launch a promotion, only to discover thirty minutes later they actually had stock all along. Not great.

So we added validation layers. The scraper would check each product page twice, five minutes apart, before confirming a stockout. If both checks showed "out of stock," then, and only then, would the system fire an alert. We also built in anomaly detection: if suddenly 50 products at one competitor all showed out of stock simultaneously, it was probably a site-wide technical issue, not a real stockout event. The system learned to hold those alerts and verify.
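Both validation layers are simple to express in code. The sketch below is an assumption-laden outline: `check` stands in for whatever function fetches a page and reports a stockout, `wait` is a sleep-like callable injected so the logic can be tested without waiting, and the threshold of 50 mirrors the example above rather than a tuned value.

```python
def confirm_stockout(check, product_url, wait, delay_seconds=300):
    """Confirm a stockout only if two checks, spaced apart, both report it.

    `check` is a hypothetical callable returning True when the page shows
    the product as out of stock; `wait` is a sleep-like callable.
    """
    if not check(product_url):
        return False
    wait(delay_seconds)  # five minutes by default
    return bool(check(product_url))


def split_alerts(confirmed_by_competitor, mass_threshold=50):
    """Separate normal alerts from suspicious site-wide batches.

    Returns (send, hold): `send` is a list of (competitor, product) pairs,
    `hold` is a list of competitors whose alerts need manual verification.
    """
    send, hold = [], []
    for competitor, products in confirmed_by_competitor.items():
        if len(products) >= mass_threshold:
            hold.append(competitor)  # likely a site-wide issue, not real stockouts
        else:
            send.extend((competitor, product) for product in products)
    return send, hold
```

In practice `wait` would be `time.sleep`, or better, a scheduler that re-queues the second check so a worker is not blocked for five minutes.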

For delivery, we set up a real-time dashboard showing current stock status across all competitors, color-coded by product category. Green meant in stock everywhere, yellow meant some competitors out, red meant most competitors out (prime opportunity). We also configured Slack alerts: instant messages to their team whenever a high-priority product went out of stock at a competitor.
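The color rule and the alert message are easy to sketch. Two assumptions are baked in here: "most" is interpreted as strictly more than half of the monitored competitors, and the alert is built as a JSON body with a `text` field, which is the basic format Slack incoming webhooks accept. Sending the request is left out, and the function names are hypothetical.

```python
import json


def opportunity_color(out_of_stock_flags):
    """Return a dashboard color from per-competitor out-of-stock flags."""
    total = len(out_of_stock_flags)
    if total == 0:
        raise ValueError("at least one competitor is required")
    out = sum(1 for flag in out_of_stock_flags if flag)
    if out == 0:
        return "green"
    # Assumption: "most" means strictly more than half of competitors.
    if out * 2 > total:
        return "red"
    return "yellow"


def slack_payload(competitor, product):
    """Build the JSON body for a Slack incoming webhook (sending is omitted)."""
    return json.dumps({"text": f"{competitor} out of stock: {product}"})
```

For example, with five competitors, one stockout maps to yellow and three map to red.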

The system ran checks every four hours for most products, but every two hours for their top 50 revenue-driving items. They could also trigger manual scans if they wanted to check something immediately.
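The two-tier schedule boils down to a single "is this product due?" test. The sketch below assumes each product record carries a last-checked timestamp and a priority flag; `is_due` and the constant names are hypothetical.

```python
from datetime import datetime, timedelta

DEFAULT_INTERVAL = timedelta(hours=4)   # most products
PRIORITY_INTERVAL = timedelta(hours=2)  # top revenue-driving items


def is_due(last_checked, now, is_priority):
    """Return True if a product should be scraped again at time `now`."""
    if last_checked is None:
        return True  # never checked, or a manual scan was requested
    interval = PRIORITY_INTERVAL if is_priority else DEFAULT_INTERVAL
    return now - last_checked >= interval
```

A manual scan can reuse the same path by clearing the product's last-checked timestamp.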

Quality was critical. We implemented schema validation to ensure every scraped data point matched expected formats, deduplication to avoid redundant alerts, and automatic retries if a scrape attempt failed. Our infrastructure (10 servers and 195+ proxies handling 650K+ monthly requests across all client projects) gave us the reliability needed for time-sensitive competitor analysis.
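A stripped-down version of those three safeguards might look like this. The field names, the allowed status values, and the exponential backoff policy are assumptions made for illustration; the actual schema is not described here.

```python
import time

# Hypothetical record schema: field name -> expected type.
REQUIRED_FIELDS = {"product_id": str, "competitor": str, "status": str, "checked_at": str}
ALLOWED_STATUSES = {"in_stock", "out_of_stock", "pre_order", "unknown"}


def is_valid(record):
    """Check that a scraped record has every field with the expected type."""
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(record.get(field), expected_type):
            return False
    return record["status"] in ALLOWED_STATUSES


def dedupe_alerts(alerts):
    """Drop alerts that repeat the same competitor, product, and status."""
    seen = set()
    unique = []
    for alert in alerts:
        key = (alert["competitor"], alert["product_id"], alert["status"])
        if key not in seen:
            seen.add(key)
            unique.append(alert)
    return unique


def with_retries(fetch, attempts=3, backoff_seconds=1.0, sleep=time.sleep):
    """Call `fetch` until it succeeds, doubling the pause after each failure."""
    last_error = None
    for attempt in range(attempts):
        try:
            return fetch()
        except Exception as error:  # sketch only; narrow this in real code
            last_error = error
            if attempt < attempts - 1:
                sleep(backoff_seconds * (2 ** attempt))
    raise last_error
```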

Behind the scenes: the stuff that almost broke us

This part was trickier than expected. One of the competitors used aggressive bot detection. Our scraper kept getting blocked, even with rotating proxies. We spent two days debugging, trying different approaches: headless browsers, different user agents, timing variations. What finally worked was slowing down. We added randomized delays between requests and made the scraper's behavior more human-like: scrolling the page, hovering over elements, waiting a few seconds before extracting data. It felt inefficient, but it worked.
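The randomized pacing is the easiest piece to illustrate. In this sketch the 2 to 8 second range is an arbitrary assumption, not the values we settled on, and `polite_delays` is a hypothetical helper; the browser-side behavior (scrolling, hovering) is not shown.

```python
import random


def polite_delays(n_requests, min_seconds=2.0, max_seconds=8.0, rng=None):
    """Return one randomized pause (in seconds) per upcoming request."""
    rng = rng or random.Random()
    return [rng.uniform(min_seconds, max_seconds) for _ in range(n_requests)]
```

A scraper loop would sleep for the next value in the list before each request, so the gaps between requests never form a fixed rhythm.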

Another challenge was interpreting pre-order status. One competitor listed products as "available for pre-order" when they were technically out of current stock. Did that count as out of stock? We went back to the client. Turns out yes: for their purposes, pre-order meant the item wasn't shippable today, which created an opportunity. We updated the logic.

Honestly, what surprised us was how often competitors' websites just broke. Not from our scraping; they had their own issues. Pages would load with missing price information, or show error messages, or display "null" where availability should be. We had to build resilience into the system to distinguish between "website is broken" and "product is genuinely unavailable."
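One way to draw that line is to classify every fetch before it reaches the alerting logic. The sketch below is illustrative only: `classify_page` is a hypothetical function, and the list of "broken" markers and availability phrases is an assumption based on the symptoms described above.

```python
def classify_page(http_status, availability_text):
    """Return 'broken', 'unavailable', 'available', or 'unknown' for one fetch."""
    if http_status != 200:
        return "broken"
    text = (availability_text or "").strip().lower()
    # Empty or placeholder values suggest the page itself failed to render.
    if text in ("", "null", "undefined", "error"):
        return "broken"
    if "out of stock" in text or "unavailable" in text:
        return "unavailable"
    if "add to cart" in text or "in stock" in text:
        return "available"
    return "unknown"
```

Only the "unavailable" result should ever feed a stockout alert; "broken" and "unknown" are better routed to a retry or a review queue.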

I still remember the moment we almost sent 200 false alerts. One competitor temporarily took down their entire catalog for maintenance at 3 AM. Our scraper dutifully checked, saw everything unavailable, and queued up alerts for every single monitored product. Thankfully we'd implemented that anomaly detection I mentioned: the system paused, flagged the batch as suspicious, and waited. By 4 AM the competitor's site was back up and the crisis was avoided.

These weren't failures, really. They were learning moments. Each edge case made the system smarter.

What we discovered in the data

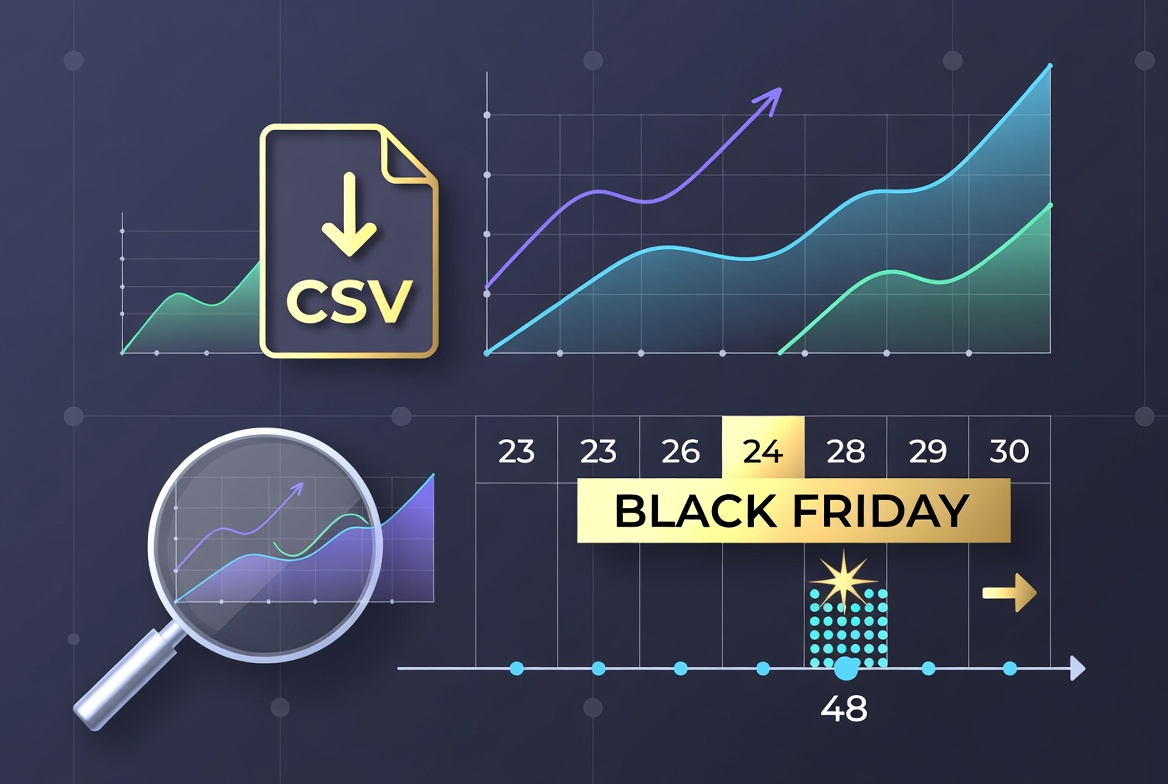

Once the system was running smoothly, the patterns that emerged were fascinating. We exported the data weekly as CSV files with timestamps, product IDs, competitor names, and stock status changes. The client's team started analyzing it like market intelligence, because that's exactly what it was.
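For illustration, an export along those lines can be produced with Python's standard `csv` module. The column names below are assumptions that mirror the fields just listed, and `export_changes` is a hypothetical helper; the real export layout may have differed.

```python
import csv
import io

# Hypothetical column layout for the weekly export.
FIELDS = ["timestamp", "product_id", "competitor", "old_status", "new_status"]


def export_changes(changes):
    """Serialize a list of status-change dicts to CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(changes)
    return buffer.getvalue()
```

Writing to an in-memory buffer keeps the function easy to test; a real job would write the same text to a dated file.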

First discovery: stockouts weren't random. Certain competitors consistently ran out of high-demand products faster than others, probably because they ordered less inventory to minimize risk. That was useful information. It meant predictable opportunities.

Second: stockouts often happened in clusters. If Competitor A ran out of a popular laptop model, Competitors B and C usually ran out within 48 hours. That made sense, since they were all sourcing from similar supply chains. But it also meant the window to capitalize was short. By the time most competitors were out, the client needed to already be promoted and visible.

Third, and this genuinely surprised everyone: weekend stockouts were gold. Most competitor marketing teams didn't work weekends, so if a product went out of stock Saturday morning, it often stayed out until Monday. But shoppers still browsed and bought on weekends. The client started scheduling weekend monitoring more aggressively and saw conversion spikes.

We also found seasonality. In the lead-up to Black Friday, stockouts surged. Competitors underestimated demand, or held back inventory for the actual sale day, and gaps appeared everywhere. The client used those weeks to push their availability hard: "In Stock Now" badges everywhere, fast shipping promises, the works.

What made this more than just data collection was the interpretation. They weren't just passively observing; they were using inventory monitoring insights to make active decisions.

Business results: turning alerts into revenue

Here's where it got real. Within the first month, the client ran a controlled experiment. When they received a stock alert for one of their top 20 products, they'd immediately update their homepage to feature that item with an "Available Now: Others Out of Stock" message. They also pushed that product in their email newsletter and adjusted Google Shopping ads to boost its visibility.

The results? Measurable uplift. On days when they actively promoted based on competitor stockouts, sales for those specific products increased by 20-30% compared to baseline. Not huge, but very real. Over a quarter, that added up to tens of thousands of euros in additional revenue from better timing alone.

Even more valuable was the strategic insight. Their head of procurement started using the stock monitoring data to make buying decisions. If competitors were frequently running out of a product category, it signaled strong market demand. They'd order more inventory in those categories, confident they could move it.

They also got smarter about marketing spend. Instead of running generic ads all the time, they'd increase ad budgets for products where competitors had stockouts. Why compete on equal footing when you can advertise during moments of scarcity? The return on ad spend improved noticeably.

The system essentially gave them a timing advantage. They were reacting to market conditions, specifically competitor supply gaps, faster than anyone else in their space. It's the kind of edge that's hard to quantify in a single metric, but you feel it in the overall momentum of the business.

And honestly, the team loved it. The alerts created a sense of urgency and opportunity. When a Slack notification popped up ("Competitor X out of stock: Gaming Laptop Model Y"), it felt like spotting an open goal. They'd scramble, adjust, and often see results within hours.

Reflections: what we learned as consultants

This project reinforced something we'd suspected but hadn't fully proven: in e-commerce, timing is underrated. Everyone obsesses over price monitoring and matching competitor prices to the cent. That matters, but availability might matter more. If you're in stock and they're not, you can even charge a bit more and still win the sale.

It also taught us that automation isn't just about efficiency; it's about speed. Sure, the client could have manually checked competitor websites every day. But by the time a human noticed a stockout, compiled a list, got approval to update marketing materials, and pushed changes live, hours had passed. Competitors might have restocked. Shoppers might have already gone elsewhere. Real-time alerts collapsed that timeline to minutes.

From a technical perspective, this was a reminder that reliability beats sophistication. We could have built something incredibly fancy with machine learning to predict stockouts before they happened. But what the client actually needed was a simple, rock-solid system that checked pages, caught changes, and sent alerts without fail. We delivered that, and it was enough.

I'm also glad we pushed back on some early ideas. The client initially wanted us to scrape competitor warehouse data or supplier information to predict stock levels. That would've been invasive, possibly unethical, and definitely outside the scope of public web data. We stuck to what was publicly visible on product pages (information any shopper could see), and that turned out to be plenty.

One last thing: this project worked because the client had the operational maturity to act on the data. We've done price monitoring projects where clients collected data beautifully but never actually used it to change anything. Data without action is just noise. This client had processes in place: clear ownership, fast decision-making, willingness to experiment. That's what turned web scraping into business value.

Final thoughts: there's probably a gap you're not watching

If you're in e-commerce, you're already watching your own inventory obsessively. You know exactly when you're running low, when to reorder, how fast products move. But do you know when your competitors run out? Do you know which products are frequently unavailable across the market, signaling unmet demand?

Most companies don't. They're flying blind on one of the most actionable signals in their industry. Stock availability is public information; it's right there on product pages for anyone to see. The trick is watching systematically, across dozens or hundreds of products, continuously, and reacting quickly.

That's where automation and web scraping make sense. Not because it's trendy or complicated, but because it solves a real timing problem that humans can't solve manually at scale.

We're biased, obviously; this is what we do at Let's Scrape. But after working on projects like this, I genuinely believe most e-commerce companies are sitting on opportunities they just haven't noticed yet. Competitor stock monitoring was one. There are others: review sentiment tracking, pricing pattern analysis, market trend detection in job postings or real estate listings. The web is full of public data that, when collected and structured properly, tells you things worth knowing.

If you're curious whether there's a data opportunity hiding in your market, it's worth exploring. Maybe it's stock levels. Maybe it's something else entirely. Either way, the first step is just asking: what would I do differently if I knew X in real time? If you have a good answer to that question, the rest is just execution.

And execution is the part we enjoy. We've built the infrastructure, refined the processes, learned from the edge cases. We know how to turn messy website HTML into clean datasets, how to monitor changes reliably, how to deliver data in formats that actually integrate into your workflows. We've done this enough times that the 24-72 hour proof-of-concept timeline isn't a marketing claim; it's just how long it takes us to validate what's possible.

If this story resonated, or if you're dealing with something similar in your industry, feel free to reach out. We're at letsscrape.com, and honestly, we just like talking through these problems. Sometimes a 30-minute conversation is enough to clarify whether data extraction makes sense for your situation. No pressure, no hard sell. Just a conversation between people who find this stuff interesting.

Because at the end of the day, that's what we are: people who get genuinely excited when a scraper catches a stockout at 2:13 PM and a client launches a promotion by 2:28 PM and sees orders roll in by 3 PM. It's a small thing, but it feels good. That's the kind of work we like doing.

FAQ

1. How does competitor stock monitoring actually work?

Competitor stock monitoring uses web scraping technology to automatically check product availability on competitor websites multiple times per day. The system visits product pages, extracts availability status (in stock, out of stock, pre-order, etc.), compares it to previous checks, and sends instant alerts when status changes. It's like having someone manually check hundreds of competitor products every few hours, but automated and instantaneous.

2. Is it legal to scrape competitor websites for stock information?

Yes, when done properly. Stock availability is publicly visible information that any shopper can see. We only scrape data that's accessible without logging in or bypassing security measures. It's the same information you'd see by visiting the website yourself; we just do it systematically and at scale. That said, it's important to respect robots.txt files, use reasonable request rates, and follow ethical scraping practices.

3. How quickly can a stock monitoring system be set up?

Our typical proof-of-concept timeline is 48-72 hours. That includes setting up scrapers for initial competitors, testing reliability, and delivering first real alerts. Full deployment with all products and competitors usually takes 2-4 weeks, depending on complexity and number of sites being monitored. The key is starting small to validate value before scaling.

4. What happens if a competitor changes their website design?

This is why robust monitoring systems need maintenance. When competitors redesign their sites, parsing logic may need updates. We build scrapers with flexibility in mind and monitor for anomalies that might indicate layout changes. Most clients opt for ongoing maintenance where we handle updates proactively, ensuring continuous reliability without interruption.

5. How much does competitor stock monitoring cost?

Costs vary based on scope: number of products monitored, frequency of checks, number of competitors, and complexity of target websites. A typical setup for 400 products across 5 competitors with checks every 2-4 hours might range from $500 to $2,000 per month, depending on technical requirements. The ROI usually justifies the investment quickly; our client saw returns within the first month.

6. Can this work for industries outside of electronics?

Absolutely. Any industry where stock availability impacts buying decisions can benefit: fashion, home goods, automotive parts, toys, sporting goods, beauty products, and more. The underlying principle is the same: knowing when competitors can't fulfill demand gives you a timing advantage to capture those sales.

7. What if competitors use JavaScript to load availability information?

Many modern websites use JavaScript to dynamically load content. Our scrapers can handle this using headless browsers that render JavaScript just like a regular browser would. It adds complexity and can slow down scraping, but it's entirely feasible and something we deal with regularly.

8. How do you prevent false alerts from temporary website glitches?

We use multiple validation layers: checking each product twice with a delay between checks, implementing anomaly detection for site-wide issues, and building in logic to distinguish between genuine stockouts and technical errors. This significantly reduces false positives while maintaining alert speed.

9. Can the system track more than just in-stock vs. out-of-stock?

Yes. Advanced monitoring can track stock levels ("Only 2 left"), delivery timeframes ("Ships in 2-3 weeks"), pre-order status, regional availability differences, and more. The level of detail depends on what information competitors display publicly and what's most valuable for your business decisions.

10. What's the best way to act on stock monitoring alerts?

Speed matters. Our most successful clients have processes in place: clear ownership of alerts, pre-approved response templates, ability to quickly update homepage banners or product featured positions, and flexibility to adjust ad spend. The goal is to go from alert to action in under 30 minutes for high-priority products.